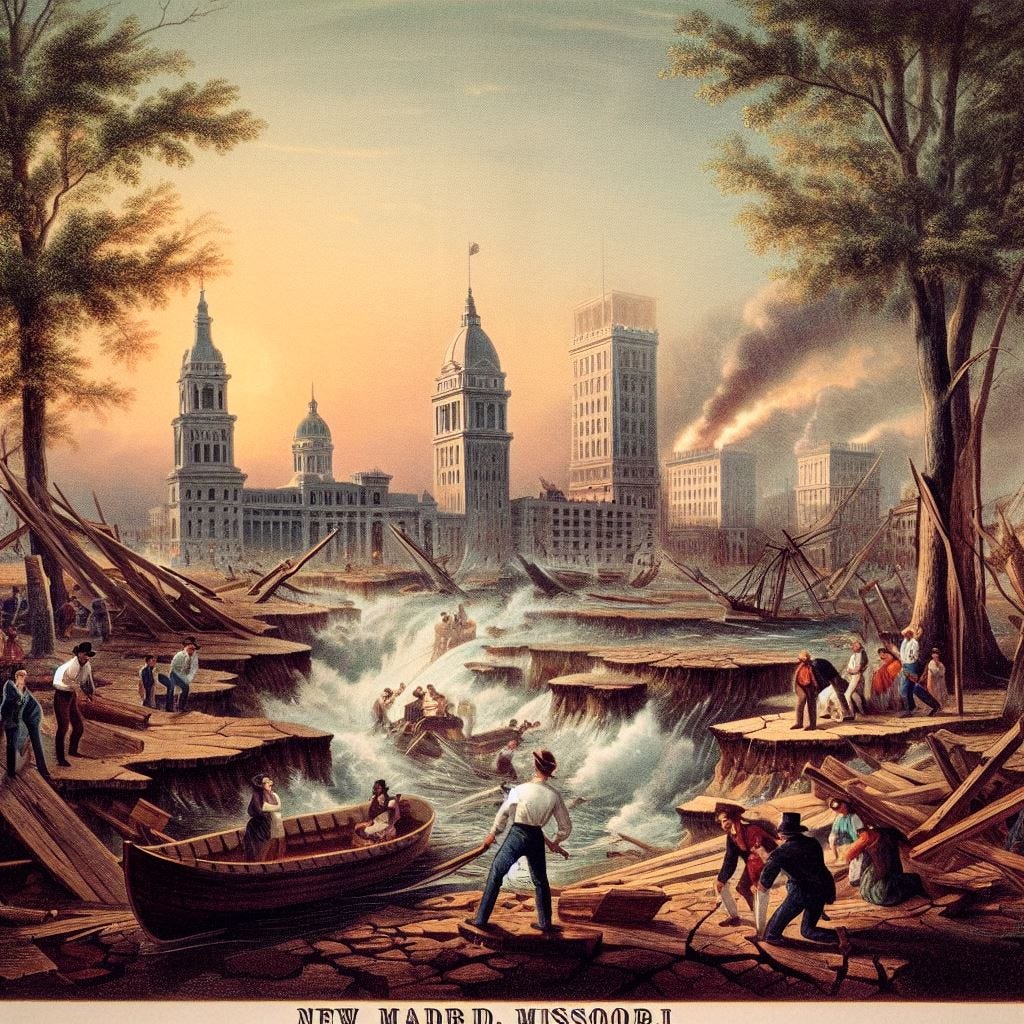

Vague Instructions Yield Mixed Results (New Madrid Earthquake)

Learning to Be More Specific With Prompts for AI-Generated Images

I’ve been borderline obsessed with Bing Image Creator these last couple of days. Today I wanted it to generate some images of the Great (as in very large) New Madrid Earthquake of 1811/1812 as well as some images of the California Gold Rush in the area where I grew up (Calaveras County, California). This post will cover the first event; the gold mining will follow in another post.

I found with the first set of generated images, though, that I wasn’t being specific enough with my prompts, and had to refine them a couple of times each to get close to what I really wanted.

Here are some examples where you can see that, as I got more specific with my instructions to the AI robot (software), it got more specific with its results. This may remind you of the old saying regarding computers, “Garbage In, Garbage Out” (GIGO). In this case, it’s “Vague In, Vague Out,” or VIVO.

Another software expression is “stepwise refinement.” You may need this when “training” your AI robot.

First, I entered the prompt “new madrid earthquake 1811” and got these images:

I wonder if the AI robot (I’ll call him Albert Immerwach) thought New Madrid was in Spain? With that possibility in mind, I refined the prompt to “new madrid missouri earthquake 1811 and 1812” and got these:

Wanting fewer images of St. Louis (if that’s the city being shown, which it probably is, as it is the state’s largest metropolitan area) and more of the rural areas along the Mississippi River, I refined the prompt one more time to “new madrid missouri earthquake mississippi river 1811 and 1812” and got these:

One of the images still shows tall mountains in the background, which is wrong for Missouri and in fact all of the Mississippi Valley. There are hills in “them thar hills,” but no mountains. And Albert still insisted on including a lot of urban scenes.
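
For readers who like to see this sort of thing in code, here is a minimal sketch of the stepwise refinement I went through, written in Python. The `Prompt` class and its field names are my own invention for illustration and have nothing to do with how Bing Image Creator works internally. The script only builds the three prompt strings; it does not call any image service.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Prompt:
    """Hypothetical container for the pieces of an image prompt."""
    place: str
    event: str
    years: str
    region: str = ""   # added in the second attempt
    setting: str = ""  # added in the third attempt

    def text(self) -> str:
        # Join only the pieces that have been filled in so far.
        parts = [self.place, self.region, self.event, self.setting, self.years]
        return " ".join(p for p in parts if p)


# Each step keeps the previous prompt and adds one more specific detail.
first = Prompt(place="new madrid", event="earthquake", years="1811")
second = replace(first, region="missouri", years="1811 and 1812")
third = replace(second, setting="mississippi river")

for step, prompt in enumerate((first, second, third), start=1):
    print(f"{step}: {prompt.text()}")
```

Running it prints the same three prompts I typed in by hand, each one a little less vague than the one before.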